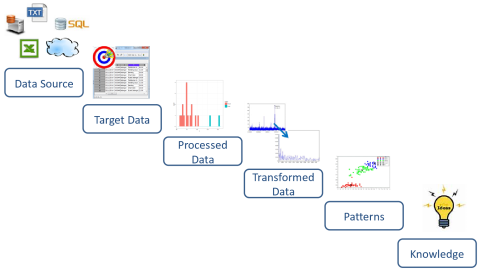

Typical processes of data mining

This article has been kindly contributed by Tan Chin Luh of Tritytech.

—

Data mining is the computer-aided process of searching through and analysing huge sets of data to understand what the data mean. Data mining has a wide range of uses, especially in the era of big data and the Internet of Things.

Data analysts always need a powerful and flexible tool for data mining. However, existing tools often come at a cost: they are expensive and closed source.

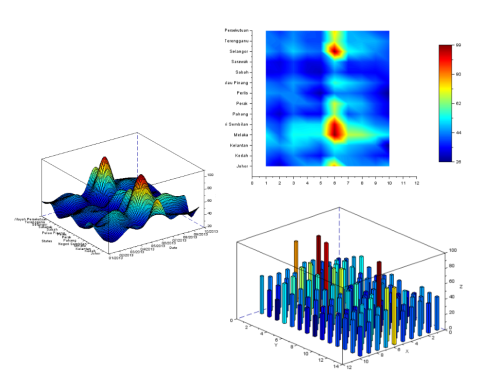

Scilab provides tools for data mining, from database connections to analysis and visualisation tools.

Stage 1: Data Sources

Scilab by itself can handle various data sources such as text, Excel, CSV, and others. With its ability to link with other languages, Scilab can talk to almost any data source. For example, the JSON module lets the user easily convert a JSON object to a Scilab data type, and the Scilab interface with Java lets users connect to SQL databases through the API.
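As a minimal sketch of reading one such source, the snippet below loads a CSV file with Scilab's built-in `csvRead`. The file name and its contents are made up for illustration; the file is written to Scilab's temporary directory first so the example is self-contained.

```scilab
// Write a small, made-up CSV file so the example is self-contained.
csvPath = fullfile(TMPDIR, "readings.csv");   // hypothetical file name
mputl(["1,20.5"; "2,21.0"; "3,19.8"], csvPath);

// Read the comma-separated file into a matrix of doubles.
readings = csvRead(csvPath, ",");

disp(size(readings));   // number of rows and columns read
disp(readings(:, 2));   // second column, here the made-up measurement
```

Once the data are in a matrix, the usual Scilab indexing and matrix operations apply to them directly.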

Stage 2: Data Processing

Processing data is an important stage before jumping into data visualization or modeling. Irrelevant data can produce a wrong model, which in turn leads to a wrong interpretation of the data. Scilab's matrix operations let users easily extract the targeted data with indexing and process it with a few lines of commands. Available modules also allow the data to be transformed to other domains, such as the frequency domain, for a different view of the data.
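The following is a small sketch of these ideas using only core Scilab functions. The signal, the sampling rate and the variable names are assumptions chosen for illustration: it drops a row with a missing value, selects rows by a condition, and takes an FFT to view the signal in the frequency domain.

```scilab
// Made-up data: column 1 is time in seconds, column 2 is a measurement.
fs = 100;                          // assumed sampling rate in Hz
t = (0:fs-1)' / fs;                // one second of samples
x = sin(2 * %pi * 5 * t);          // 5 Hz sine wave
data = [t, x];
data(10, 2) = %nan;                // simulate one missing reading

// Keep only the rows whose measurement is not NaN (boolean indexing).
valid = ~isnan(data(:, 2));
clean = data(valid, :);

// Select a subset: rows where the measurement is positive.
positive = clean(clean(:, 2) > 0, :);

// Frequency-domain view: magnitude spectrum of the original signal.
X = abs(fft(x));
[peak, k] = max(X(1:fs/2));        // search the first half of the spectrum
freq = (k - 1) * fs / length(x);   // convert the bin index to Hz

mprintf("%d valid rows, %d positive rows\n", size(clean, 1), size(positive, 1));
mprintf("Dominant frequency: %g Hz\n", freq);
```

Boolean indexing keeps the filtering step to a single line, which is the kind of compact extraction the paragraph above describes.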

Stage 3: Data Interpretation

We can apply different methods to understand the data. From statistical analysis to exploring the data with artificial intelligence methods such as fuzzy logic, neural networks and support vector machines, Scilab can assist users with these tasks.
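On the statistical side, a first look at a data set can be as simple as the sketch below. The values are made up, and the two-standard-deviation rule for flagging unusual points is just one common convention, not a method prescribed by this article.

```scilab
// Made-up sample with one suspiciously large value.
scores = [12 15 11 19 14 13 40];

m  = mean(scores);      // arithmetic mean
md = median(scores);    // median, less sensitive to extreme values
s  = stdev(scores);     // sample standard deviation

// Flag values more than two standard deviations away from the mean.
unusual = scores(abs(scores - m) > 2 * s);

mprintf("mean = %g, median = %g, stdev = %g\n", m, md, s);
disp(unusual);
```

The fuzzy logic, neural network and support vector machine methods mentioned above rely on additional Scilab modules and are not shown here.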